
Classification and Regression Trees (CART) is a term used to describe decision tree algorithms that are used for classification and regression learning tasks.

CART was introduced in 1984 by Leo Breiman, Jerome Friedman, Richard Olshen and Charles Stone for classification and regression tasks. It is also a predictive model, which helps find the value of a target variable based on other labelled variables. Put more plainly, tree models predict the outcome by asking a series of if-else questions.

Two advantages of using tree models are as follows:

- They are able to capture non-linearity within the data set.

- There is no need to standardise the data when using tree models.
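A minimal sketch of the second point, in plain Python with hypothetical height data: a split depends only on the order of a feature's values, and standardising a feature preserves that order, so the best split sends exactly the same rows to each branch. The helper `best_threshold_partition` is a name made up for this illustration and uses the Gini index as its split criterion.

```python
def best_threshold_partition(values, labels):
    """Return the set of row indices sent to the left branch by the best
    Gini split 'value <= threshold' on a single numeric feature."""
    def gini(group):
        n = len(group)
        if n == 0:
            return 0.0
        return 1.0 - sum((group.count(c) / n) ** 2 for c in set(group))

    n = len(values)
    best_score, best_left = float("inf"), None
    for threshold in sorted(set(values))[:-1]:  # the max value would leave the right side empty
        left = [i for i in range(n) if values[i] <= threshold]
        right = [i for i in range(n) if values[i] > threshold]
        # Weighted Gini impurity of the two child nodes
        score = (len(left) * gini([labels[i] for i in left]) +
                 len(right) * gini([labels[i] for i in right])) / n
        if score < best_score:
            best_score, best_left = score, set(left)
    return best_left

# Hypothetical data, made up for this illustration
heights_cm = [150, 160, 165, 172, 180, 185, 190]
labels = ["F", "F", "F", "M", "M", "M", "M"]

# Standardise the feature: subtract the mean, divide by the standard deviation
mean = sum(heights_cm) / len(heights_cm)
std = (sum((h - mean) ** 2 for h in heights_cm) / len(heights_cm)) ** 0.5
heights_std = [(h - mean) / std for h in heights_cm]

raw_split = best_threshold_partition(heights_cm, labels)
std_split = best_threshold_partition(heights_std, labels)
print(raw_split == std_split)  # True: the same rows go left either way
```

Only the numeric threshold changes (165 cm versus its standardised equivalent); the partition of the rows, and therefore the tree's predictions, stay the same.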

What are Decision Trees?

If you strip it right down to the fundamentals, decision tree algorithms are nothing but if-else statements that can be used to predict a result based on data.
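To make this concrete, here is a sketch of a tiny decision tree written by hand as plain Python if-else statements. The function name `will_buy_phone` and both thresholds (age 30, income 50,000) are illustrative assumptions; a learned tree would choose its own questions and thresholds from the training data.

```python
def will_buy_phone(age: int, income: float) -> str:
    """A hand-written decision tree: each 'if' is an internal node,
    each 'return' is a leaf holding the prediction."""
    if age > 30:                # root node asks about age
        return "buys"
    else:
        if income > 50_000:     # second question, only asked when age <= 30
            return "buys"
        else:
            return "does not buy"

print(will_buy_phone(age=42, income=20_000))  # buys
print(will_buy_phone(age=25, income=30_000))  # does not buy
```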

Machine learning algorithms are often classified into two types: supervised and unsupervised. A decision tree is a supervised machine learning algorithm. It has a tree-like structure with its root node at the top.

When studying CART, it helps to understand:

- The many names used to describe the CART algorithm for machine learning.

- The representation used by learned CART models, which is what is actually stored on disk.

- How a CART model can be learned from training data.

- How a learned CART model can be used to make predictions on unseen data.

- Additional resources that you can use to learn more about CART and related algorithms.

Decision trees are commonly used in data mining with the goal of creating a model that predicts the value of a target (or dependent) variable based on the values of several inputs (or independent variables).

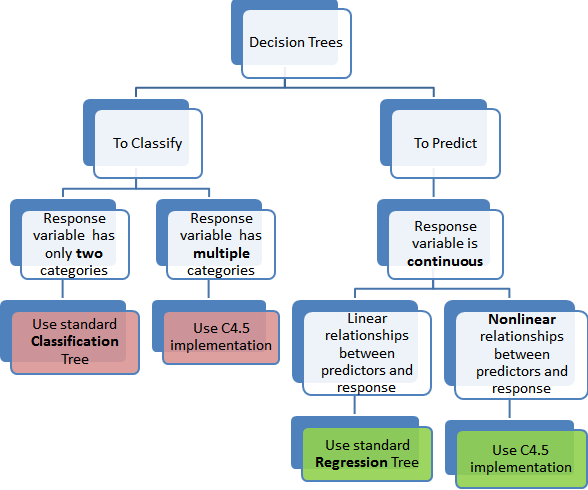

Classification and Regression Trees

The CART, or Classification and Regression Trees, methodology refers to these two kinds of decision trees. While there are many tutorials and videos on classification and regression trees, here is a simple definition of each kind, along with examples.

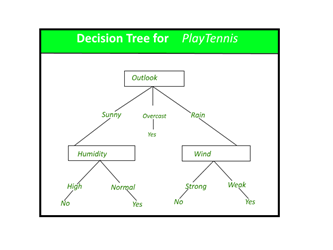

(i) Classification Trees

A classification tree is an algorithm where the target variable is categorical, that is, it takes one of a fixed set of values. The algorithm is then used to identify the “class” into which a target variable would most likely fall. An example of a classification-type problem would be determining who will or won’t subscribe to a digital platform, or who will or won’t graduate from high school.

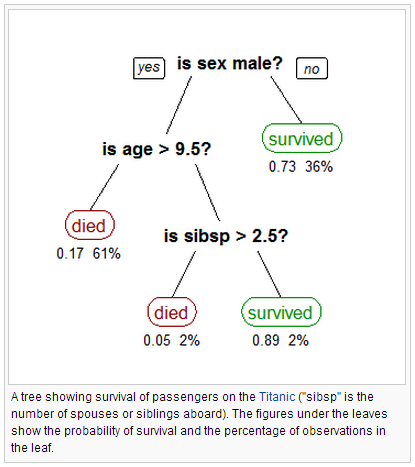

These are examples of simple binary classifications, where the categorical variable can take only one of two mutually exclusive values. In other cases, you might need to predict among several different classes. For instance, you may need to predict which type of smartphone a consumer is likely to purchase. In such cases, the categorical variable has multiple possible values. Here’s what a classic classification tree looks like.

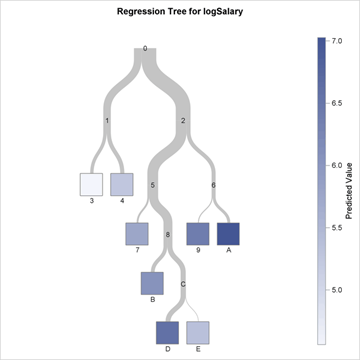

(ii) Regression Trees

A regression tree refers to an algorithm where the target variable is continuous and the algorithm is used to predict its value. As an example of a regression-type problem, you may want to predict the selling price of a residential house, which is a continuous variable.

This will depend on both continuous factors, like square footage, and categorical factors, like the type of home, the area in which the property is located, and so on.

Difference Between Classification and Regression Trees

Decision trees are easy to understand, and there are many presentations on classification and regression trees that make things even simpler. However, it’s important to know that there are some fundamental differences between classification and regression trees.

When to use Classification and Regression Trees

Classification trees are mostly used when the dataset needs to be split into classes that belong to the response variable. In many cases, the classes are Yes or No. In other words, there are just two classes, and they are mutually exclusive. In some cases, there may be more than two classes, in which case a variant of the classification tree algorithm is used.

Regression trees, on the other hand, are used when the response variable is continuous. For instance, if the response variable is something like the price of a property or the temperature of the day, a regression tree is used.

In short, regression trees are used for problems that predict a continuous value, while classification trees are used for classification-type problems.

How Classification and Regression Trees Work

A classification tree splits the dataset based on the homogeneity of the data. Say, for instance, there are two variables, income and age, which determine whether or not a consumer will buy a particular kind of phone.

If the training data shows that 95% of people who are older than 30 bought the phone, the data gets split there and age becomes a top node in the tree. This split makes the data “95% pure”. Measures of impurity such as entropy or the Gini index are used to quantify the homogeneity of the data in classification trees.
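Both impurity measures are short formulas. The Gini index is 1 minus the sum of the squared class proportions, and entropy is minus the sum of p * log2(p) over the class proportions p; both are 0 for a perfectly pure node. The sketch below computes them for a hypothetical node of 100 people matching the 95% example above.

```python
import math
from collections import Counter

def gini(labels):
    """Gini index: 1 - sum of squared class proportions (0 means a pure node)."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def entropy(labels):
    """Entropy in bits: -sum of p * log2(p) over the classes present."""
    n = len(labels)
    return -sum((count / n) * math.log2(count / n) for count in Counter(labels).values())

# Hypothetical node: 95 of 100 people older than 30 bought the phone
node = ["bought"] * 95 + ["did not buy"] * 5
print(round(gini(node), 3))     # 0.095
print(round(entropy(node), 3))  # 0.286
```

A 50/50 node would score 0.5 on the Gini index and 1.0 bit of entropy, so lower values mean a more homogeneous node.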

In a regression tree, a regression model is fit to the target variable using each of the independent variables. After this, the data is split at several points for each independent variable.

At each such point, the error between the predicted values and the actual values is squared to get a “Sum of Squared Errors” (SSE). The SSE is compared across the variables, and the variable or point with the lowest SSE is chosen as the split point. This process is continued recursively.
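The SSE search can be sketched in a few lines of plain Python. This assumes, as in standard CART regression trees, that each side of a candidate split predicts the mean of its training targets; the house data and the function names `sse` and `best_split` are hypothetical.

```python
def sse(values):
    """Sum of squared errors when every value is predicted by the group mean."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)

def best_split(rows, targets):
    """Try every feature and every candidate threshold; return the
    (feature_index, threshold, total_sse) with the lowest SSE."""
    best = None
    for j in range(len(rows[0])):
        for threshold in sorted({row[j] for row in rows}):
            left = [t for row, t in zip(rows, targets) if row[j] <= threshold]
            right = [t for row, t in zip(rows, targets) if row[j] > threshold]
            if not left or not right:
                continue  # a split must send rows to both sides
            total = sse(left) + sse(right)
            if best is None or total < best[2]:
                best = (j, threshold, total)
    return best

# Hypothetical houses: (square footage, number of bedrooms) -> price in thousands
rows = [(800, 2), (950, 2), (1100, 3), (1500, 3), (1800, 4), (2100, 4)]
prices = [150, 160, 170, 300, 320, 340]
print(best_split(rows, prices))  # (0, 1100, 1000.0)
```

For this data, splitting on square footage at 1,100 gives a total SSE of 1000.0, lower than any split on bedrooms, so it would be chosen; a full tree would then repeat the same search inside each half.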

The CART algorithm is structured as a sequence of questions, the answers to which determine what the next question, if any, should be. The result of these questions is a tree-like structure whose ends are terminal nodes, at which point there are no more questions.

A simple example of a decision tree is as follows:

The important elements of CART or any decision tree algorithm are:

- Rules for splitting data at a node based on the value of one variable

- Stopping rules for deciding when a branch is terminal and can be split no further
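The two elements can be combined in a short recursive sketch. It uses the Gini index as the splitting rule and three stopping rules: the node is pure, a maximum depth is reached, or too few rows remain. The names `build_tree`, `max_depth` and `min_samples`, the nested-dict tree format and the (age, income) data are all hypothetical choices for this illustration.

```python
from collections import Counter

def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def build_tree(rows, labels, depth=0, max_depth=3, min_samples=2):
    """Grow a binary classification tree recursively.
    Returns either a leaf (a class label) or a dict describing a split."""
    majority = Counter(labels).most_common(1)[0][0]
    # Stopping rules: pure node, depth limit reached, or too few rows to split
    if gini(labels) == 0.0 or depth >= max_depth or len(rows) < min_samples:
        return majority

    # Splitting rule: the single feature/threshold with the lowest weighted Gini
    best = None
    for j in range(len(rows[0])):
        for t in sorted({r[j] for r in rows}):
            left = [i for i, r in enumerate(rows) if r[j] <= t]
            right = [i for i, r in enumerate(rows) if r[j] > t]
            if not left or not right:
                continue
            score = (len(left) * gini([labels[i] for i in left]) +
                     len(right) * gini([labels[i] for i in right])) / len(rows)
            if best is None or score < best[0]:
                best = (score, j, t, left, right)
    if best is None:  # all rows identical: no valid split exists
        return majority

    _, j, t, left, right = best
    return {
        "feature": j,
        "threshold": t,
        "left": build_tree([rows[i] for i in left], [labels[i] for i in left],
                           depth + 1, max_depth, min_samples),
        "right": build_tree([rows[i] for i in right], [labels[i] for i in right],
                            depth + 1, max_depth, min_samples),
    }

# Hypothetical data: (age, income in thousands) -> bought the phone?
rows = [(22, 20), (24, 40), (25, 70), (28, 30), (35, 25), (40, 35), (45, 60)]
labels = ["no", "no", "yes", "no", "yes", "yes", "yes"]
tree = build_tree(rows, labels)
print(tree)
```

For this made-up data the root question is age <= 28; the right branch is already pure, and the left branch asks one more question about income, so the printed tree is `{'feature': 0, 'threshold': 28, 'left': {'feature': 1, 'threshold': 40, 'left': 'no', 'right': 'yes'}, 'right': 'yes'}`.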

CART Model Representation

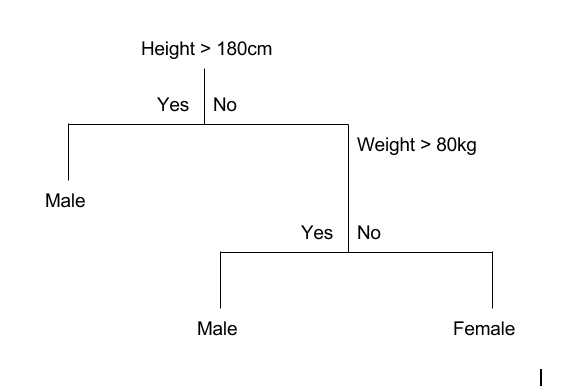

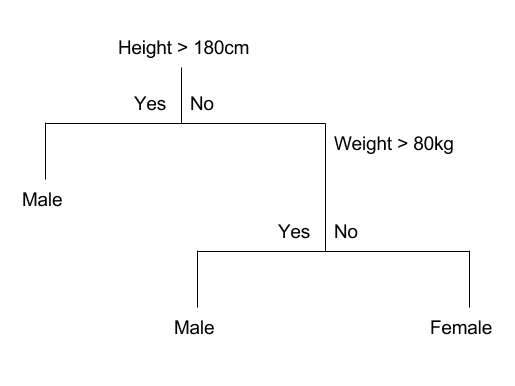

The representation for the CART model is a binary tree. This is the same binary tree you meet in algorithms and data structures, nothing too fancy. Each internal node represents a single input variable (x) and a split point on that variable (assuming the variable is numeric).

The leaf nodes of the tree contain an output variable (y), which is used to make a prediction. Consider, for example, a dataset with two inputs (x), height in centimetres and weight in kilograms, and an output of sex as male or female. A binary decision tree for this data would test height or weight at each internal node and hold the predicted sex at each leaf; any such example tree is completely fictitious and for demonstration purposes only.
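In code, this representation maps onto two small types: internal nodes that store an input variable and a split point, and leaves that store the output value. The sketch below assumes a "go left if less than or equal" convention; the class names and the thresholds of 180 cm and 80 kg are made up for illustration, just like the fictitious tree described above.

```python
from dataclasses import dataclass
from typing import Union

@dataclass
class Node:
    """Internal node: tests one input variable against a split point."""
    feature: str   # name of the input variable (x)
    split: float   # go left if x[feature] <= split, else right
    left: "Tree"
    right: "Tree"

@dataclass
class Leaf:
    """Terminal node: holds the output value (y) used as the prediction."""
    value: str

Tree = Union[Node, Leaf]

def predict(tree: Tree, x: dict) -> str:
    # Walk from the root, answering one question per internal node
    while isinstance(tree, Node):
        tree = tree.left if x[tree.feature] <= tree.split else tree.right
    return tree.value

# Fictitious tree with made-up thresholds, for demonstration only
tree = Node("height_cm", 180,
            left=Node("weight_kg", 80, left=Leaf("female"), right=Leaf("male")),
            right=Leaf("male"))

print(predict(tree, {"height_cm": 165, "weight_kg": 60}))  # female
print(predict(tree, {"height_cm": 185, "weight_kg": 70}))  # male
```

Making a prediction costs one comparison per level of the tree, which is why evaluating a learned tree on new data is fast.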

Advantages of Classification and Regression Trees

The purpose of the analysis conducted by any classification or regression tree is to create a set of if-else conditions that allow for the accurate prediction or classification of a case. Classification and regression trees work to produce accurate predictions or predicted classifications based on that set of if-else conditions. They offer several advantages, described below.

(i) The Results are Simple

The results summarised in a classification or regression tree are usually fairly simple to interpret. This simplicity helps in the following ways.

- It allows for the rapid classification of new observations. That’s because it’s much simpler to evaluate just one or two logical conditions than to compute scores using complex nonlinear equations for each group.

- It can often result in a simpler model that explains why observations are classified or predicted in a certain way. For instance, business problems are much easier to explain with if-then statements than with complex nonlinear equations.

(ii) Classification and Regression Trees are Nonparametric (Distribution-Free) and Nonlinear

The results from classification and regression trees can be summarised in simple if-then conditions. This removes the need for the following implicit assumptions:

- The relationship between the predictor variables and the dependent variable is linear.

- The relationship between the predictor variables and the dependent variable follows some specific nonlinear link function.

- The relationship between the predictor variables and the dependent variable is monotonic.

Since there’s no need for such implicit assumptions, classification and regression tree methods are well suited to data mining. This is because there is often little or no prior knowledge, and few assumptions can be made beforehand, about how the different variables are related.

As a result, classification and regression trees can reveal relationships between these variables that might not have been uncovered using other techniques.

Limitations of Classification and Regression Trees

Classification and regression tree tutorials and presentations exist in abundance. This is a testament to the popularity of these decision trees and how frequently they’re used. However, these decision trees aren’t without their disadvantages.


By Madhav Sabbarwal
